With each release of Exchange, we have seen a substantial shift in how load balancers must be configured. For example, between Exchange 2010 and 2013, the requirement for session affinity was dropped. This allowed multiple requests from a single client to take different paths to its mailbox. It no longer mattered which client access servers in a site were involved in the session. This contrasted with Exchange 2010, where a client session had to maintain a single path at all times. Ross Smith covers this in greater detail here.

Exchange 2013 also dropped support for traditional RPC (RPC over TCP) connections. All Outlook client connections to Exchange 2013 were flipped to RPC over HTTP. Service Pack 1 brought another shift in client connectivity by introducing the option of MAPI over HTTP (although it was disabled by default). This faster, leaner protocol allowed the Exchange Team to deliver shorter failover times between servers. It also introduced another virtual directory into the mix, the MAPI virtual directory, which would need to be load balanced as well.

Unlike its predecessor, Exchange 2016 did not see a shift in client connectivity. With Exchange 2016, an organization can choose between MAPI over HTTP and RPC over HTTP (although the former is now preferred).

Knowing the nuances between each version of Exchange can be daunting. Equally daunting is the configuration of the load balancer itself. But it doesn’t have to be.

A good vendor will give you instructions on load balancing Exchange.

A great vendor will give you a template to automate setup.

Which is lucky because this article is about a great vendor.

In this article, we configure the Kemp load balancer to provide high availability for Exchange 2016. If you don’t have a load balancer, you can download one for free from Kemp. Kemp’s free appliance is what we will use in this guide.

Don’t worry. Despite the focus being on Kemp, you can translate these principles to any vendor.

Let’s get started!

Disclaimer: I need to point out that I am not sponsored by Kemp in any way. However, this document does contain some affiliate links.

The environment

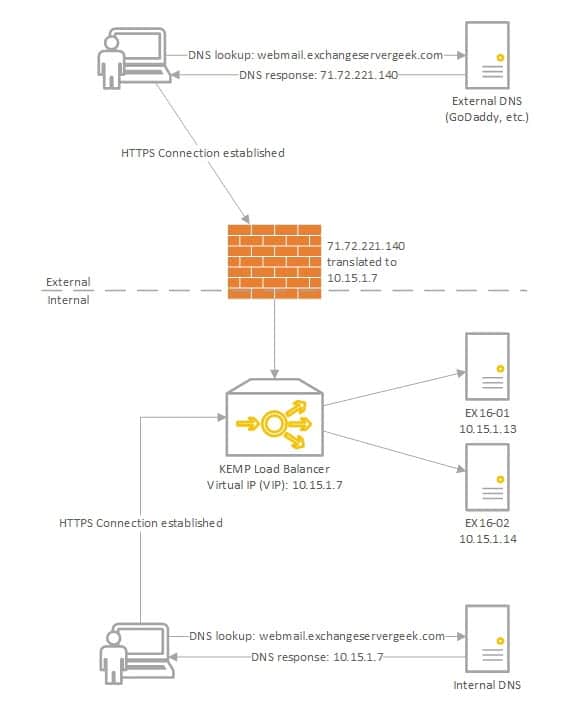

In our example, we plan to have two Exchange 2016 servers behind a load balancer in a single site: EX16-01 and EX16-02.

The Kemp load balancer will be a virtual load balancer running on ESXi 5.5. We have already deployed the Kemp image to a virtual machine, run through the initial welcome screens and assigned a management IP. Aside from this very basic configuration, no load balancing has been configured yet.

A third-party certificate containing all the names in our namespace (webmail.exchangeservergeek.com and autodiscover.exchangeservergeek.com) is installed on EX16-01 and EX16-02.

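All Exchange URLs will use routable top-level domains, and internal and external clients will use the same names. This means we will use split DNS: our internal DNS will resolve the namespace to the load balancer’s Virtual IP, while our external DNS provider will resolve webmail.exchangeservergeek.com to 7172.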

Note: This article will also work for Exchange 2013.

Luke, use the templates!

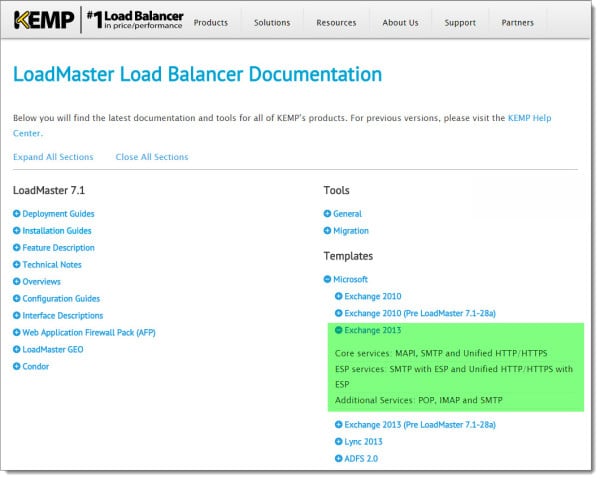

Kemp provides templates for the configuration of its load balancers. These templates cover many technologies, including Exchange, and give you a tremendous head start in configuring your load balancer. That head start will save you a lot of time. More importantly, it eliminates much of the room for human error. It really doesn’t take much effort to get a Kemp load balancer configured for Exchange. In this article, we explore the configuration of a Kemp load balancer using its Exchange 2013 templates (these templates also work for 2016).

To download the Exchange templates visit https://kemptechnologies.com/loadMaster-documentation/.

Kemp offers three distinct template packs.

- Core Services: This is the base template for all Exchange HTTPS services.

- ESP Services: The Edge Security Pack (ESP) is a template for advanced authentication needs. One benefit of ESP is pre-authentication of clients. This allows the load balancer to take the brunt of any brute force attacks, passing only legitimate requests to the Exchange servers. It is a great alternative if you had previously been using Microsoft Threat Management Gateway (TMG).

- Additional Services: If you plan to offer POP or IMAP then I recommend snagging this template as well.

For this guide, I am going to download just the Core Services template pack.

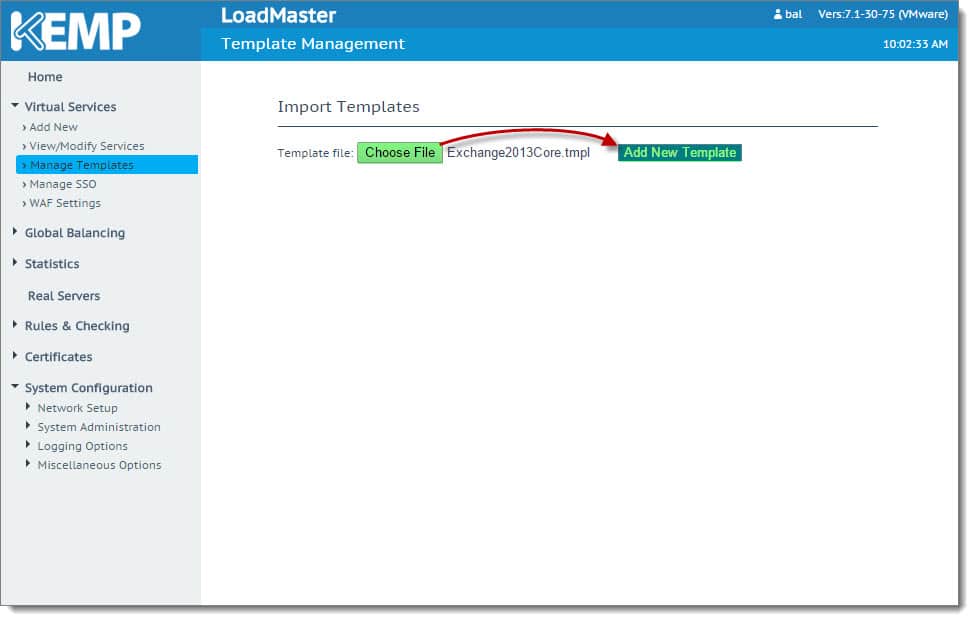

To import a template, expand Virtual Services and select Manage Templates. Click the Choose File button and locate your template. Click Add New Template.

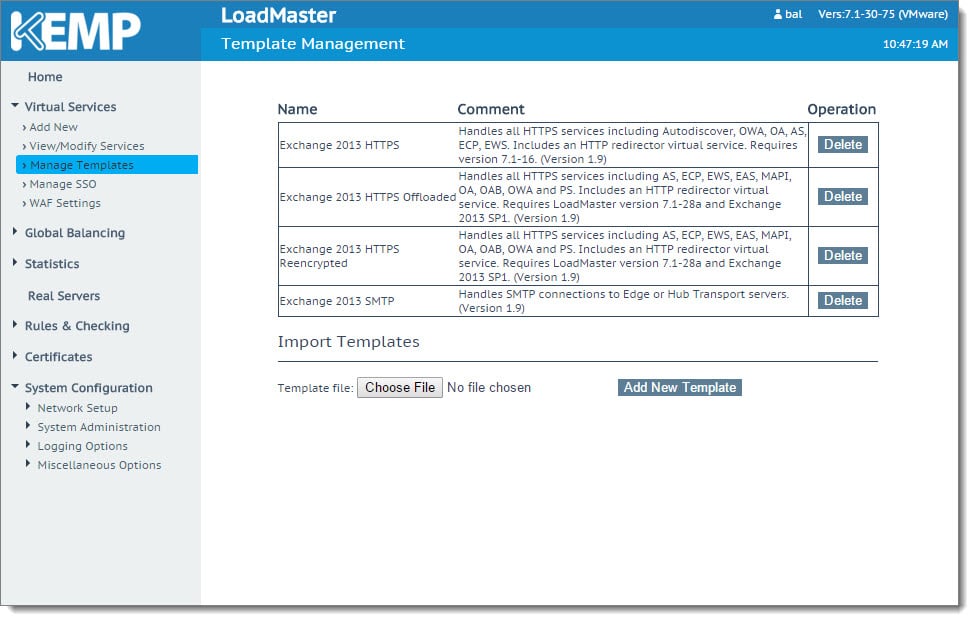

You will receive a confirmation that the templates have been installed. Click OK. The screen will refresh, showing the newly installed templates. You can also use this screen to delete templates should you wish.

Repeat this process for any additional templates you need.

Creating the Virtual IP (VIP)

With our templates installed, we can move on to creating our Virtual IP (VIP) for Exchange. The IP we choose for our VIP must not be used anywhere else in the environment. The Virtual IP will represent all client access servers in our site. Based on the environment diagram above, our VIP will be 10.15.1.7.
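Before settling on a VIP, it can help to sanity-check the address. The Python sketch below uses only the standard `ipaddress` module and assumes the lab values from this article plus a /24 subnet (the prefix length is an assumption; the article does not state it). It also assumes a one-arm layout like the lab’s, where the VIP sits on the servers’ subnet. The `in_use` set is simply whatever addresses you already know are assigned; in practice you would add the load balancer’s management IP and anything recorded in DHCP or IPAM, and no script can prove an address is truly free.

```python
import ipaddress

# Lab values from this article; the /24 prefix length is an assumption.
LAB_SUBNET = ipaddress.ip_network("10.15.1.0/24")
EXCHANGE_SERVERS = {"EX16-01": "10.15.1.13", "EX16-02": "10.15.1.14"}


def validate_vip(vip: str, subnet: ipaddress.IPv4Network, in_use: set) -> list:
    """Return a list of problems with a proposed VIP; an empty list means none found."""
    problems = []
    addr = ipaddress.ip_address(vip)
    if addr not in subnet:
        # In a one-arm setup the VIP should sit on the same subnet as the servers.
        problems.append(f"{vip} is outside {subnet}")
    elif addr in (subnet.network_address, subnet.broadcast_address):
        problems.append(f"{vip} is the network or broadcast address")
    if vip in in_use:
        problems.append(f"{vip} is already assigned")
    return problems


if __name__ == "__main__":
    known = set(EXCHANGE_SERVERS.values())
    print(validate_vip("10.15.1.7", LAB_SUBNET, known))   # the article's VIP
    print(validate_vip("10.15.1.13", LAB_SUBNET, known))  # collides with EX16-01
```

For the article’s VIP the first call finds no problems, while the second flags the collision with EX16-01.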

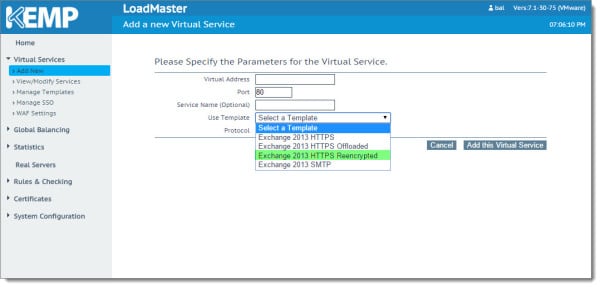

To create a new Virtual IP, expand Virtual Services and select Add New. From the Use Template drop-down, pick the template you wish to use.

Which template you pick depends on your business needs.

- HTTPS Offloaded: The key benefit of SSL offloading is that the load balancer, instead of the Exchange servers, takes over the responsibility of decrypting all secure communications. SSL decryption consumes processor cycles on the Exchange servers, so shifting this workload to a virtual machine or hardware appliance is a nice option. To put the security hat on for a moment, it does mean communication between the load balancer and the Exchange servers is not encrypted. This presents a potentially larger security issue if you are using one-arm load balancing, where all clients, servers and the load balancer are on the same subnet. There is also additional configuration you need to perform on the Exchange side, which you must repeat after each Cumulative Update because the settings are not retained. However, these steps can be scripted.

- HTTPS Reencrypted: This template uses SSL bridging. Like SSL offloading, SSL bridging decrypts and examines the packets. Both technologies then use content rules to match each request to a virtual directory and deliver that traffic to a server where that virtual directory is marked healthy. The difference is that bridging re-encrypts the traffic before sending it on to the Exchange server. This is much more secure than offloading. The other benefit is that no additional configuration changes have to be made to Exchange.

- HTTPS: This template is the simplest of all solutions, as all SSL acceleration components are disabled. This means SSL decryption does not take place at the load balancer, which in turn means there are no sub-virtual services for each virtual directory. At this level, only a single health check can be performed. Most often, administrators pick the OWA virtual directory as a litmus test for the entire server. The problem is that with only one health probe, the load balancer has no insight into the health of the other Exchange virtual directories. Should the OWA health probe fail, the entire server will be written off, even if the other virtual directories are still functional. Similarly, if the OWA health check reports healthy but the OAB virtual directory fails, client requests for the OAB will still be sent to that server. It is possible to get around this, but that would require separate namespaces for each virtual directory, with a corresponding Virtual Service (and IP) for each. And where is the fun in that? If you want more comprehensive health checking without the complexity of multiple VIPs, I recommend either of the first two options.
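The difference between a single probe and per-virtual-directory health checks is easiest to see in a toy model. The Python sketch below is not how the LoadMaster works internally; it is a simplified illustration in which a request’s first path segment stands in for the template’s content rules, and the failure scenario is hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class RealServer:
    name: str
    # Virtual directories currently passing their health check (lowercase).
    healthy_vdirs: set = field(default_factory=set)


def eligible_single_probe(servers, probe_vdir="owa"):
    """HTTPS template model: one probe (here OWA) decides for the whole server."""
    return [s.name for s in servers if probe_vdir in s.healthy_vdirs]


def eligible_per_vdir(servers, path):
    """Reencrypted/Offloaded model: the first path segment picks the virtual
    directory, and only servers where that directory is healthy qualify."""
    vdir = path.strip("/").split("/")[0].lower()
    return [s.name for s in servers if vdir in s.healthy_vdirs]


if __name__ == "__main__":
    # Hypothetical failure: OAB is broken on EX16-01 while OWA is fine.
    servers = [
        RealServer("EX16-01", {"owa", "ecp", "ews"}),
        RealServer("EX16-02", {"owa", "ecp", "ews", "oab"}),
    ]
    print("OAB request, single probe:", eligible_single_probe(servers))
    print("OAB request, per-vdir:    ", eligible_per_vdir(servers, "/OAB/"))
```

Run as a script, the single-probe model still offers EX16-01 for OAB requests, while the per-directory model sends them only to EX16-02.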

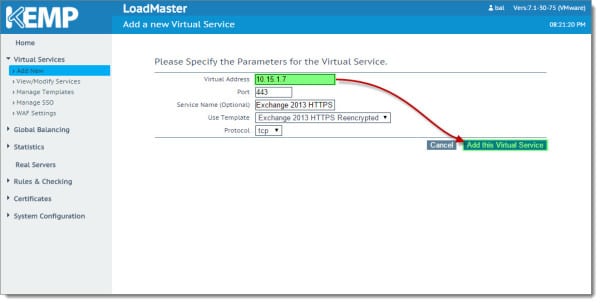

For this guide, I will be using the HTTPS Reencrypted template. I prefer this load balancing method over the others because it offers considerably more insight into the health of the servers without sacrificing security. Security matters all the more here because my lab is a single subnet, so I will be using a one-arm configuration for my load balancer: all clients, servers and the load balancer will sit on the same subnet.

Once we select the template, it automatically populates the Port and Protocol fields. It also suggests a Service Name, which you can change to whatever you desire. You will still need to enter a Virtual Address manually. Based on our environment diagram, this will be 10.15.1.7. Once complete, click Add this Virtual Service.

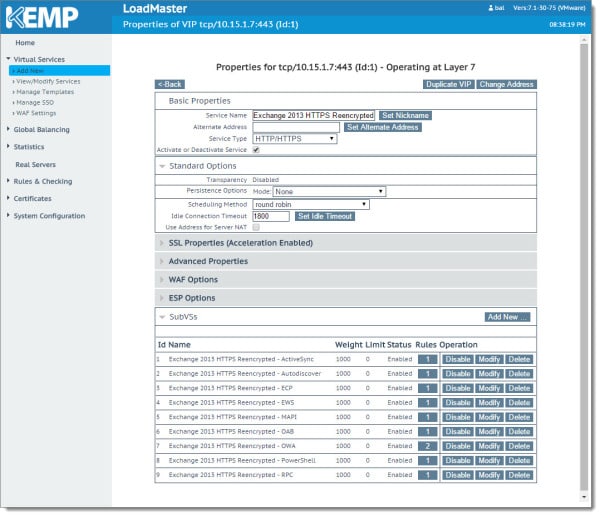

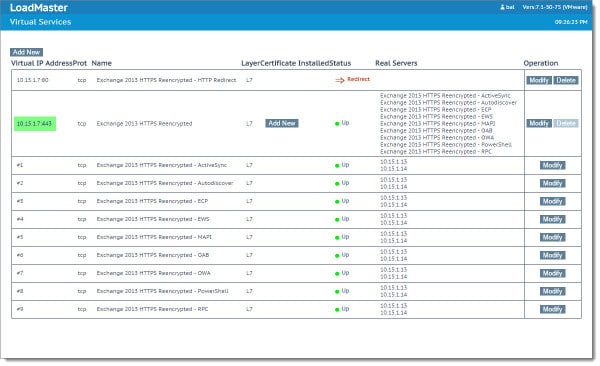

This brings us to the properties screen for the virtual service. This is where the awesome sauce of the template really kicks in. All the hard work has already been done for us, including the creation of nine sub-Virtual Services, each corresponding to an Exchange virtual directory.

In addition, this template has configured our SSL acceleration settings and a port 80 redirect. Any traffic coming into the Virtual IP on port 80 will be redirected to port 443. You can expand each section to see the options the template has configured.
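To confirm the redirect from a client’s point of view, a small Python sketch can send a plain HTTP request to port 80 and inspect the response. It uses only the standard `http.client` module. The article does not say which redirect status code the template returns, so the check accepts any standard 3xx redirect code; the default path `/owa` is just an example.

```python
import http.client
from typing import Optional, Tuple
from urllib.parse import urlsplit

REDIRECT_CODES = {301, 302, 303, 307, 308}


def is_https_redirect(status: int, location: Optional[str]) -> bool:
    """True if the response is a redirect whose target uses https."""
    return (
        status in REDIRECT_CODES
        and location is not None
        and urlsplit(location).scheme.lower() == "https"
    )


def probe_port_80(host: str, path: str = "/owa") -> Tuple[int, Optional[str]]:
    """Send a plain-HTTP GET to port 80 and return (status, Location header)."""
    conn = http.client.HTTPConnection(host, 80, timeout=5)
    try:
        conn.request("GET", path)
        resp = conn.getresponse()
        return resp.status, resp.getheader("Location")
    finally:
        conn.close()
```

For example, `is_https_redirect(*probe_port_80("webmail.exchangeservergeek.com"))` should be `True` once the VIP is answering. Passing the namespace rather than the raw VIP keeps the Host header the same as a real client would send.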

Configuring SubVS

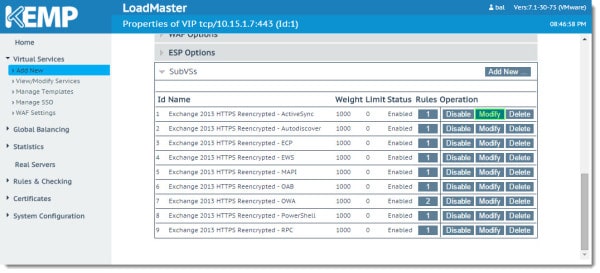

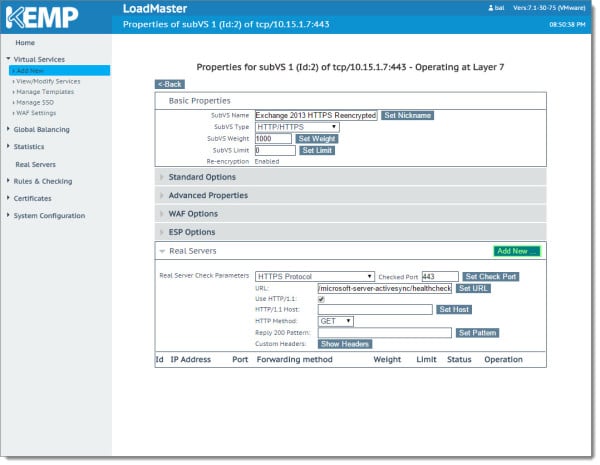

Let’s continue by modifying the first SubVS. In the first row, select Modify. In our lab, this is ‘Exchange 2013 HTTPS Reencrypted – ActiveSync’.

Just as the template configured the Virtual Service, it has also configured each SubVS. Under the Real Servers section, you will notice that the health check URL settings for this particular virtual directory have already been configured. All we need to do on this screen is add our Exchange servers. To do this, click the Add New… button.
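Exchange 2013 (CU3 and later) and Exchange 2016 publish a per-virtual-directory health page, `/<vdir>/healthcheck.htm`, which returns HTTP 200 while that protocol is healthy on the server. The Python sketch below polls those pages on each real server so you can compare the results with what each SubVS reports. Whether the Kemp template probes exactly these URLs is an assumption, so check the health check URL shown on each SubVS; the nine-directory list is likewise an assumption. Because the servers are reached by IP, the sketch includes a lab-only TLS context that skips certificate verification.

```python
import ssl
import urllib.request

# Exchange 2013/2016 virtual directories; this list is an assumption about
# which nine directories the template's SubVSs cover.
VDIRS = ["owa", "ecp", "EWS", "Microsoft-Server-ActiveSync", "OAB",
         "Autodiscover", "rpc", "mapi", "PowerShell"]
SERVERS = ["10.15.1.13", "10.15.1.14"]


def health_url(server: str, vdir: str) -> str:
    """Managed Availability health page for one virtual directory."""
    return f"https://{server}/{vdir}/healthcheck.htm"


def lab_context() -> ssl.SSLContext:
    """LAB ONLY: skip certificate checks, because connecting by IP will not
    match the names on the certificate."""
    ctx = ssl.create_default_context()
    ctx.check_hostname = False
    ctx.verify_mode = ssl.CERT_NONE
    return ctx


def is_healthy(url: str, context: ssl.SSLContext, timeout: float = 5) -> bool:
    """Healthy means the page answered with HTTP 200."""
    try:
        with urllib.request.urlopen(url, timeout=timeout, context=context) as resp:
            return resp.status == 200
    except OSError:  # URLError/HTTPError, TLS errors and timeouts derive from OSError
        return False


def poll(servers, vdirs, context):
    """Return {(server, vdir): healthy?} for every combination."""
    return {(s, v): is_healthy(health_url(s, v), context) for s in servers for v in vdirs}
```

Calling `poll(SERVERS, VDIRS, lab_context())` from a machine on the lab subnet gives a quick per-directory view of both servers.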

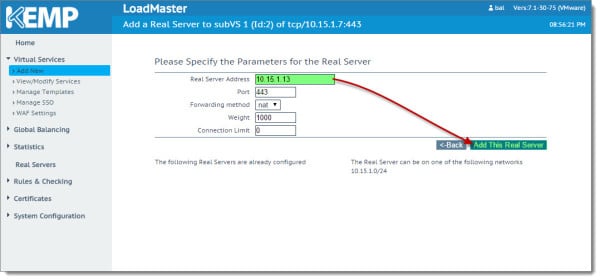

This brings up the Parameters for the Real Server screen. The template has already filled out most of these fields for us. All we need to add here is the IP address of our first Exchange server in the Real Server Address field. Then click the Add This Real Server button. In our lab, our first server is 10.15.1.13.

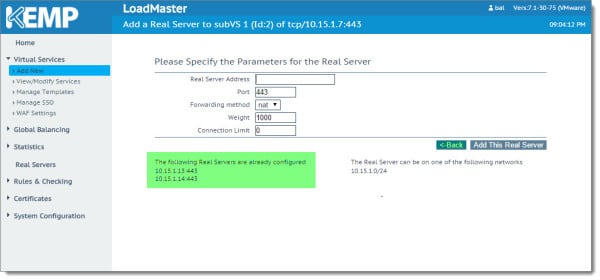

You will receive a confirmation that the server was successfully added. As each server is added, it will be displayed under ‘The Following Real Servers Are Already Configured’ section. Repeat this process for all additional Exchange servers in your site. In our lab, we have just one additional server to add: 10.15.1.14. When complete, click the Back button.

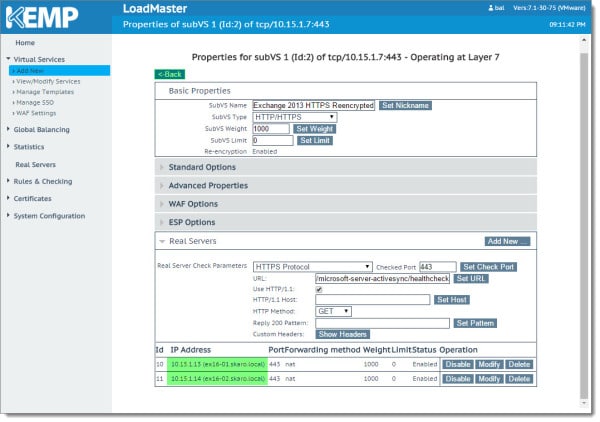

You will notice the servers now listed at the bottom of the SubVS page. Click Modify to make corrections to a server. Click Delete to remove the server. You can also administratively disable a server from just that SubVS with the Disable button. When ready click the Back button.

You will need to repeat these steps on the remaining eight SubVSs. Once all nine are complete click the Back button.
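With nine SubVSs and two servers, that is 18 real-server entries added by hand, and it is easy to miss one. A tiny Python sketch of the checklist: given what each SubVS currently lists (typed in or copied from the UI), it reports the gaps. The SubVS names used here are hypothetical shorthand, and the script does not talk to the load balancer.

```python
# Real servers from this lab.
REAL_SERVERS = {"10.15.1.13", "10.15.1.14"}


def missing_servers(subvs: dict, required: set) -> dict:
    """Map each SubVS to the real servers it still lacks (complete ones are omitted)."""
    gaps = {}
    for name, assigned in subvs.items():
        missing = required - set(assigned)
        if missing:
            gaps[name] = sorted(missing)
    return gaps


if __name__ == "__main__":
    # Hypothetical state after forgetting the second server on the OAB SubVS.
    state = {
        "ActiveSync": {"10.15.1.13", "10.15.1.14"},
        "OAB": {"10.15.1.13"},
    }
    print(missing_servers(state, REAL_SERVERS))
```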

The end result should be all green. If you select the IP:Port link under the Virtual IP Address column, it will break the status down by virtual directory.

Adding our Exchange Certificate

Now we need to add our Exchange certificate to the load balancer. This is necessary so the load balancer can decrypt the traffic using the certificate’s private key. To do this, click the Add New button under the Certificate Installed column.

Tip: For instructions on exporting certificates from Exchange check this article.
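Since the load balancer will now present this certificate to clients, it is worth confirming that it covers every name in the namespace. A Python sketch with no third-party packages: `sans_from_peercert` reads the DNS names from the dictionary that `ssl.SSLSocket.getpeercert()` returns for a verified connection, and `uncovered` lists any namespace name that no certificate entry matches. The wildcard handling is a simplified single-label match for illustration, not a complete implementation of the matching rules.

```python
# Names clients will use; taken from the certificate described in this article.
NAMESPACE = ["webmail.exchangeservergeek.com", "autodiscover.exchangeservergeek.com"]


def sans_from_peercert(cert: dict) -> list:
    """Extract DNS names from the dict returned by ssl.SSLSocket.getpeercert()."""
    return [value for kind, value in cert.get("subjectAltName", ()) if kind == "DNS"]


def name_matches(pattern: str, host: str) -> bool:
    """Compare a certificate name to a host name; '*.' covers exactly one label."""
    pattern, host = pattern.lower().rstrip("."), host.lower().rstrip(".")
    if pattern.startswith("*."):
        label, sep, rest = host.partition(".")
        return bool(label) and bool(sep) and rest == pattern[2:]
    return pattern == host


def uncovered(names: list, sans: list) -> list:
    """Namespace names that no name on the certificate covers."""
    return [n for n in names if not any(name_matches(p, n) for p in sans)]
```

An empty result from `uncovered(NAMESPACE, sans)` means every name clients use is on the certificate.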

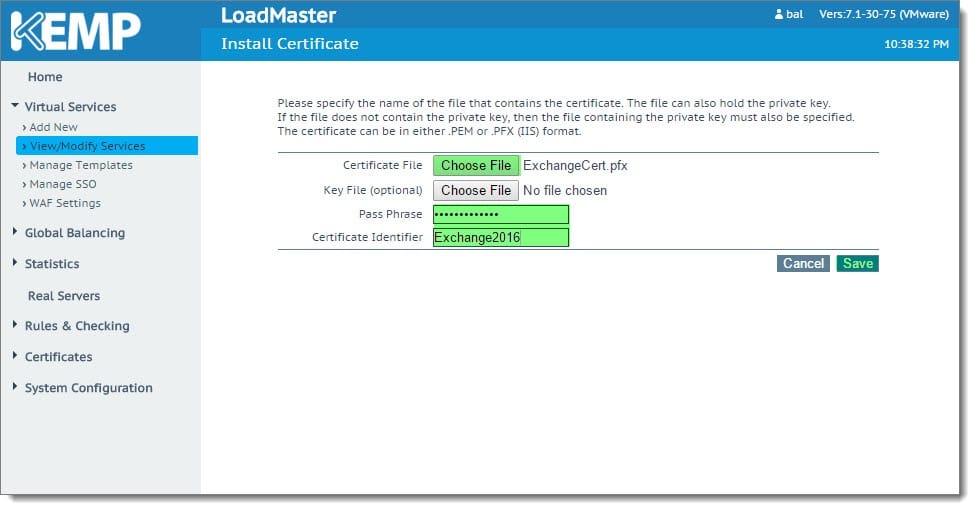

Click Import Certificate.

Next to Certificate File, click the Choose File button. Select your certificate file and click Open. In the Pass Phrase field specify the password you used when you exported the certificate. Specify a Certificate ID to identify the certificate. Click Save.

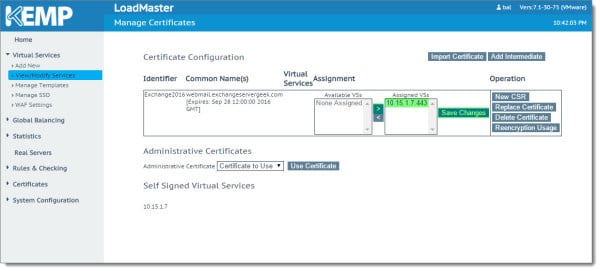

On the Certificate Configuration screen, select the virtual IP in the Available VSs box and click the right arrow. This will shift the Virtual IP (VIP) to the Assigned VSs box. Click Save Changes.

This will take us back to the View/Modify Services page. We can now see that the Exchange certificate has been applied to our virtual service (VIP).

Checking our work

Now that we have everything configured, let’s check our work. First, confirm with PING or NSLOOKUP that your namespace resolves to the new virtual IP (VIP). If it does, great! In our case, our namespace resolves to 10.15.1.7. If not, double-check the DNS entries for your Exchange URLs. You may also need to clear your DNS cache.
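The same check can be scripted. This Python sketch uses the operating system’s resolver through `socket.gethostbyname_ex`, so, unlike NSLOOKUP (which queries the DNS server directly), it also reflects the local DNS cache and hosts file, which is closer to what Outlook and browsers see. It requires that every address returned is the VIP, because a stray extra record would let some clients bypass the load balancer. The `resolver` parameter exists only so the function can be tested with a stand-in.

```python
import socket

VIP = "10.15.1.7"
NAMESPACE = ["webmail.exchangeservergeek.com", "autodiscover.exchangeservergeek.com"]


def resolves_only_to(hostname: str, expected_ip: str, resolver=socket.gethostbyname_ex) -> bool:
    """True only if every address returned for hostname is the expected VIP."""
    try:
        _, _, addresses = resolver(hostname)
    except OSError:  # socket.gaierror (name not found) is an OSError
        return False
    # An extra record (e.g. pointing straight at one server) would send some
    # clients around the load balancer, so require an exact match.
    return set(addresses) == {expected_ip}
```

From an internal client, `all(resolves_only_to(n, VIP) for n in NAMESPACE)` should be `True`.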

Next, point your browser to your OWA namespace and log in. In our case, this is https://webmail.exchangeservergeek.com/owa. If you can log in, that is a really good sign. But let’s see what is really going on under the hood.

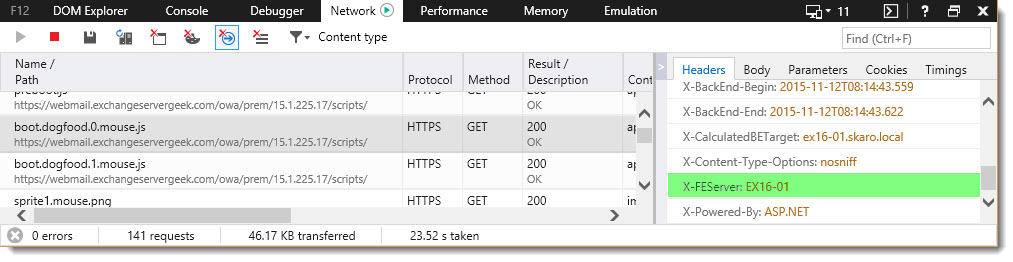

If you are using Internet Explorer, hit the F12 key to bring up the developer tools. Select the Network tab. Under this tab, you should see a list of files that have been retrieved from your email servers. If you don’t see any files, reload the page while keeping the developer tools active.

In the screenshot, you can see our browser has fetched a number of JavaScript and image files from https://webmail.exchangeservergeek.com. If we select one of those files, we can examine the response headers on the right. If we scroll to the bottom, we should see a header named X-FEServer (Front-End Server). From the screenshot, you can see that sprite1.mouse.png was delivered by EX16-02.

Let’s check another file: boot.dogfood.0.mouse.js. In this case, we can see the front-end server was EX16-01. This is also a great example of how session affinity is no longer in the mix: files requested within the same browser session were served by different front-end servers.
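Clicking through files one at a time works, but with many requests it can be easier to tally them. Many browsers’ developer tools (Chrome’s, for example) can save the Network tab as a HAR (HTTP Archive) file; this Python sketch, assuming such an export, counts how many responses each front-end server delivered according to the X-FEServer header. A mix of EX16-01 and EX16-02 is what you would hope to see, though the exact split will vary.

```python
import json
from collections import Counter


def fe_server_counts(har_text: str) -> Counter:
    """Count responses per X-FEServer value in a HAR 1.2 export."""
    counts = Counter()
    for entry in json.loads(har_text)["log"]["entries"]:
        # HAR stores headers as a list of {"name": ..., "value": ...} objects;
        # header names are case-insensitive, so compare in lowercase.
        for header in entry["response"].get("headers", []):
            if header["name"].lower() == "x-feserver":
                counts[header["value"]] += 1
                break
    return counts
```

For example, with an export saved as `owa.har` (a hypothetical file name), `fe_server_counts(open("owa.har", encoding="utf-8").read())` returns a `Counter` keyed by server name.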

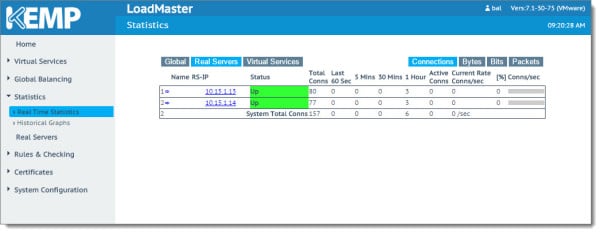

The Kemp load balancer also has some great real-time statistics. If you navigate to Statistics >> Real-Time Statistics and select Real Servers, you can see how connections are currently being distributed.

You are all set!

This wraps up load balancing Exchange HTTPS services. In a future article, we’ll explore using Kemp to load balance SMTP traffic. Also, I recommend checking out our other Exchange 2016 articles below.